Computer plays TicTacToe

Last updated on : 13th March, 2025

This is an attempt towards making a Computer play TicTacToe. I plan to write code for playing the game using Rule-based logic, Q-Learning Algorithm and taking a mix of search + RL algorithms (the alphazero way). I am currently exploring RL right now and I intend to do it using projects, this one is the first attempt at it.

The code is public and available on Github : github.com/prajwxlk/computer-plays-tictactoe.

TicTacToe is a simple game which we have all played in childhood and it’s a good choice for implementing Reinforcement Learning as it is simple, with precise rules and the number of constraints are limited. This makes it easier for us to better understand the concepts which we are trying to implement.

Rules for Playing Tic-Tac-Toe :

- Game Setup :

- The game is played on a 3×3 grid.

- There are two players: one uses X, and the other uses O.

- Players take turns placing their symbol (X or O) in an empty square.

- How to Win :

- A player wins if they place three of their symbols in a row (horizontally, vertically, or diagonally).

- If all nine squares are filled and no player has three in a row, the game is a draw.

- Game Rules •

- Players alternate turns, starting with the X player.

- A player can only place their symbol in an empty square.

- The game ends immediately when a player wins or the grid is full (draw).

Initially, we start with rule-based logic and see how good it is for a game like TicTacToe. This shall work fine initially as we are starting with a 3x3 grid but later once we have well implemented this for a 3x3 grid. We shall scale this up to larger numbers like 10x10 grid. This is where, rule-based logic will require enormous computing resources as the possible valid games will go from 255168 (in case of 3x3) upwards to 10^80 to 10^100 (in case of 10x10) which will be impossible to calculate as it is greater than the estimated atoms in the observable universe (~10⁸⁰)!

We have now implemented rule-based logic in the code for the 3x3 grid. The “Smart” strategy is are basically 5 simple rules to follow to make the right move.

The Smart Strategy Rules :

- If you can win in the next step, mark the winning block.

- If the opponent is winning in the next step, mark the block which blocks the opponent from winning.

- If the center block is available, mark it.

- If a corner block, is available - mark it.

- If none of the above moves are available, mark a side block

Each move of the game is tested sequentially against the above rules, whichever rule fits the condition best is implemented. If no rule can be selected, it’s most likely going to be a draw.

This simple smart strategy allows for us to make the best moves in the game right now in a 3x3 grid but if we were to now scale up this grid from 3x3 to 5x5 or 10x10 it will heavily increase the amount of compute that will be required to calculate these possibilities.

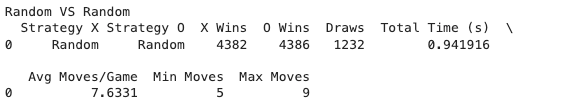

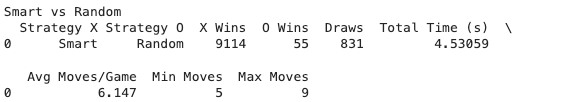

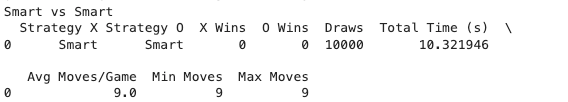

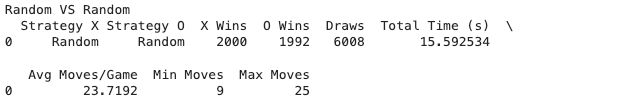

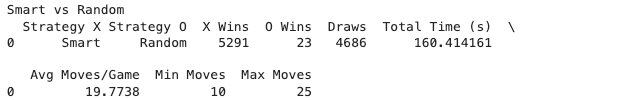

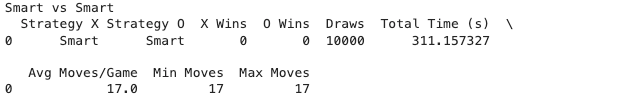

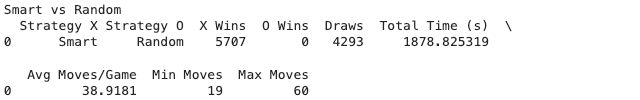

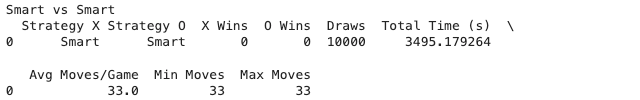

We 10,000 simulations for Random vs Random Strategy, Smart vs Random Strategy and Smart vs Smart Strategy using self play. The simulation results are as follows :

We can make several observations looking at the stats provided in the results.

- It seems that a minimum number of moves to forfeit or draw a match are 5 while the maximum number of moves can be only 9 as that is the number of blocks in the grid (3x3 Grid).

- Random vs Random Strategy is taking least time 0.9 seconds while Smart vs Smart Strategy is taking the most time 10.32 seconds. This seems reasonable as the random strategy is marking blocks randomly from the available moves while the smart strategy has to test several conditions to choose the best move from the moves available to them.

- It is fun to see that in Smart vs Random strategy, the Random strategy still is able to win a few games (55 Wins) even while making random moves though the number is significantly low.

- Smart vs Smart Strategy shows that since both of the players are making moves smartly all of the games end up being draws. I would like to see how this might change if it does in the case of a larger grid. Probably, the player with the most computer would win in that situation if they both follow the same logic.

Now let’s implement a 5x5 grid (5x5 grid instead of 10x10 as i can run these simulations locally on my device without having to rent a GPU yet, we can experiment with larger grids later) and see how the current rule-based logic is able to perform when the number of blocks increase as we scale the grid.

We have implemented and simulated 10k games for 5x5 grid as well as 9x9 grid. My machine was able to perform well enough to perform these calculations so I went forth with implementing them. Let’s make some observations based on the simulations we just ran.

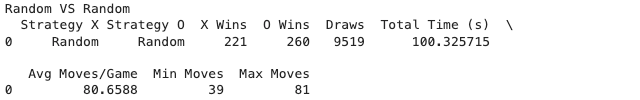

10,000 Games of 5x5 Grid :

Based on the above stats, we can make a few observations :

- We can see like in 3x3 grid, the amount of time required for Random vs Random strategy is least while Smart vs Smart is the most. This is to honest was expected due to their nature of implementation.

- Whenever Random strategy is involved, the maximum moves in the game end up being the 5x5 = 25. Therefore, covering the entire grid and drawing the matches.

- The number of moves required significantly drop as we adopt using smart strategy for each player but we need to note the fact that this comes at a severe time disadvantage as using smart strategy ends up leading to taking upto 20 times more time!

- We still can see the Smart vs Smart strategies all result into a draw even in 5x5 grid, then in Smart vs Random Strategy we can observe that even with a Random Strategy O is able to win a few number of games (very small number ofc but a win is a win). Lastly, Random vs Random has most of its games resulting into a draw while the wins are roughly equal giving a slight edge to X as it gets to mark the first block in the grid.

In the case of 9x9 Grid, we ran two simulations actually. We tried 1000 games initially and then went up to 10,000 games. The reason we did so was because I did not know how computationally intensive this could be. I am running this code on my Mac Mini M4 and it is really good at crunching these calculations, I tried using Google Colab [Free] but it started taking a lot of time in processing the data. It was way faster on My Mac Mini.

Since, we ran 1k Games and 10k Games, I’ll make my observations only on 10k Games 9x9 grid.

10,000 Games of 9x9 Grid :

Based on the above stats, we can make a few observations :

- We see that as we have increased the size of the grid significantly, the amount of time to compute the 10k games has significantly increased. Random vs Random takes 1 min 40 seconds while Smart vs Smart requires 58 and half minutes approx. When, compared to 3x3 grid that is a 106x, 414x and 338x times more time required respectively to compute 10k games.

- Another fact we can notice as we have scaled the grid, we can see that in Smart vs Random strategy the number of wins of O ends up becoming totally 0. While, in previous grid sizes the number was definitely significantly low we could at least see the random strategy win a few games but it seems since the number of blocks increase we can observe that the chances to win randomly dwindles to 0.

- The minimum number of moves in the game are 33 which can be seen in Smart vs Smart Strategy while the highest number of moves is ofcourse, 81 which is the size of the grid as well and is observed in the Random vs Random strategy.

- We see similar pattern in all the three grid sizes, Smart vs Smart strategies all lead to the same result of all matches being draw. We also see that in case of Random vs Random also, most of the matches end up being a draw while X and O have similar number of wins while giving X a slight advantage as it starts first.

The next step for us is to implement Q-Learning and to see how we can improve our computation requirement and speed. Also, we might be able to see a better strategy being implemented and used in the case of larger grids like 9x9. This will be fun to see the different and new strategies it might come up with. Let’s now go ahead and implement Q-Learning.